3. Land Cover Mapping¶

In this tutorial, we will use the overlaid points (see Overlay tutorial) to train a ML-model and predict the land cover (LC) in the last two decades, using the LandMapper class.

The training step will use elevation, slope, landsat (7 spectral bands, 4 seasons and 3 percentiles per year) and night light (VIIRS Night Band) data to predict the follow LC classes:

231: Pastures,

312: Coniferous forest,

321: Natural grasslands,

322: Moors and heathland,

324: Transitional woodland-shrub,

332: Bare rocks,

333: Sparsely vegetated areas,

335: Glaciers and perpetual snow.

First, let’s import the necessary modules

[1]:

# To work with local eumap code

# import sys

# sys.path.append('../../')

import os

import gdal

from pathlib import Path

import pandas as pd

import geopandas as gpd

from eumap.mapper import LandMapper

import warnings

warnings.filterwarnings('ignore')

3.1. Dataset¶

Our dataset refers to one tile, located in Switzerland, extracted from a tiling system created for Continental European (7,042 tiles) by GeoHarmonizer Project.

[2]:

from eumap import datasets

tile = datasets.pilot.TILES[0]

#datasets.get_data(tile+'_rasters_gapfilled.tar.gz')

data_root = datasets.DATA_ROOT_NAME

data_dir = Path(os.getcwd()).joinpath(data_root,tile)

print(f'data_dir = {data_dir}')

data_dir = /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland

Let’s load the overlaid points

[3]:

fn_points = Path(os.getcwd()).joinpath(data_dir, tile + '_landcover_samples_overlayed.gpkg')

points = gpd.read_file(fn_points)

points[points.columns[0:12]]

[3]:

| lucas | survey_date | confidence | tile_id | lc_class | overlay_id | dtm_elevation | dtm_slope | landsat_ard_fall_blue_p25 | landsat_ard_fall_nir_p25 | landsat_ard_fall_green_p25 | landsat_ard_fall_green_p75 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | False | 2006-06-30T00:00:00 | 85 | 10636 | 321 | 1 | 1948.0 | 36.313705 | 5.0 | 65.0 | 16.0 | 17.0 |

| 1 | False | 2006-06-30T00:00:00 | 85 | 10636 | 321 | 2 | 2209.0 | 7.917305 | 5.0 | 76.0 | 17.0 | 18.0 |

| 2 | False | 2006-06-30T00:00:00 | 85 | 10636 | 321 | 3 | 1990.0 | 32.722038 | 4.0 | 69.0 | 12.0 | 14.0 |

| 3 | False | 2006-06-30T00:00:00 | 85 | 10636 | 322 | 4 | 2142.0 | 49.800537 | 7.0 | 16.0 | 4.0 | 4.0 |

| 4 | False | 2006-06-30T00:00:00 | 85 | 10636 | 332 | 5 | 2420.0 | 27.018671 | 16.0 | 66.0 | 29.0 | 31.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1119 | False | 2000-06-30T00:00:00 | 85 | 10636 | 312 | 277 | 1729.0 | 16.108473 | 6.0 | 55.0 | 15.0 | 16.0 |

| 1120 | False | 2000-06-30T00:00:00 | 85 | 10636 | 332 | 278 | 2562.0 | 31.661921 | 7.0 | 25.0 | 11.0 | 26.0 |

| 1121 | False | 2000-06-30T00:00:00 | 85 | 10636 | 321 | 279 | 2174.0 | 15.649096 | 5.0 | 77.0 | 15.0 | 17.0 |

| 1122 | False | 2000-06-30T00:00:00 | 85 | 10636 | 333 | 280 | 2368.0 | 21.605083 | 28.0 | 62.0 | 38.0 | 40.0 |

| 1123 | False | 2000-06-30T00:00:00 | 85 | 10636 | 322 | 281 | 900.0 | 27.319332 | 13.0 | 70.0 | 24.0 | 25.0 |

1124 rows × 12 columns

What are the columns avaiable to the ML-model ?

[4]:

print("Columns:")

columns = []

for col_name, col_type in zip(points.columns, points.dtypes):

print(f' - {col_name} ({col_type})')

Columns:

- lucas (bool)

- survey_date (object)

- confidence (int64)

- tile_id (int64)

- lc_class (int64)

- overlay_id (int64)

- dtm_elevation (float64)

- dtm_slope (float64)

- landsat_ard_fall_blue_p25 (float64)

- landsat_ard_fall_nir_p25 (float64)

- landsat_ard_fall_green_p25 (float64)

- landsat_ard_fall_green_p75 (float64)

- landsat_ard_fall_blue_p75 (float64)

- landsat_ard_fall_swir1_p25 (float64)

- landsat_ard_fall_red_p75 (float64)

- landsat_ard_fall_nir_p50 (float64)

- landsat_ard_fall_red_p25 (float64)

- landsat_ard_fall_nir_p75 (float64)

- landsat_ard_fall_thermal_p25 (float64)

- landsat_ard_fall_swir2_p50 (float64)

- landsat_ard_fall_blue_p50 (float64)

- landsat_ard_fall_red_p50 (float64)

- landsat_ard_fall_green_p50 (float64)

- landsat_ard_fall_swir2_p25 (float64)

- landsat_ard_fall_thermal_p75 (float64)

- landsat_ard_spring_nir_p25 (float64)

- landsat_ard_spring_blue_p50 (float64)

- landsat_ard_spring_blue_p25 (float64)

- landsat_ard_spring_blue_p75 (float64)

- landsat_ard_fall_thermal_p50 (float64)

- landsat_ard_fall_swir1_p50 (float64)

- landsat_ard_fall_swir1_p75 (float64)

- landsat_ard_fall_swir2_p75 (float64)

- landsat_ard_spring_nir_p50 (float64)

- landsat_ard_spring_green_p75 (float64)

- landsat_ard_spring_red_p25 (float64)

- landsat_ard_spring_green_p50 (float64)

- landsat_ard_spring_nir_p75 (float64)

- landsat_ard_spring_green_p25 (float64)

- landsat_ard_spring_red_p50 (float64)

- landsat_ard_spring_red_p75 (float64)

- landsat_ard_summer_blue_p25 (float64)

- landsat_ard_spring_swir1_p50 (float64)

- landsat_ard_spring_swir1_p25 (float64)

- landsat_ard_spring_thermal_p75 (float64)

- landsat_ard_spring_thermal_p25 (float64)

- landsat_ard_spring_swir1_p75 (float64)

- landsat_ard_summer_green_p25 (float64)

- landsat_ard_spring_thermal_p50 (float64)

- landsat_ard_spring_swir2_p25 (float64)

- landsat_ard_summer_red_p50 (float64)

- landsat_ard_spring_swir2_p75 (float64)

- landsat_ard_summer_blue_p50 (float64)

- landsat_ard_spring_swir2_p50 (float64)

- landsat_ard_summer_nir_p25 (float64)

- landsat_ard_summer_green_p50 (float64)

- landsat_ard_summer_blue_p75 (float64)

- landsat_ard_summer_red_p75 (float64)

- landsat_ard_summer_swir1_p50 (float64)

- landsat_ard_summer_swir2_p75 (float64)

- landsat_ard_summer_swir2_p50 (float64)

- landsat_ard_summer_red_p25 (float64)

- landsat_ard_summer_green_p75 (float64)

- landsat_ard_summer_nir_p75 (float64)

- landsat_ard_summer_thermal_p75 (float64)

- landsat_ard_summer_swir2_p25 (float64)

- landsat_ard_summer_swir1_p25 (float64)

- landsat_ard_summer_nir_p50 (float64)

- landsat_ard_summer_thermal_p50 (float64)

- landsat_ard_summer_swir1_p75 (float64)

- landsat_ard_summer_thermal_p25 (float64)

- landsat_ard_winter_blue_p25 (float64)

- night_lights (float64)

- landsat_ard_winter_green_p25 (float64)

- landsat_ard_winter_green_p50 (float64)

- landsat_ard_winter_blue_p75 (float64)

- landsat_ard_winter_green_p75 (float64)

- landsat_ard_winter_red_p25 (float64)

- landsat_ard_winter_nir_p50 (float64)

- landsat_ard_winter_swir2_p50 (float64)

- landsat_ard_winter_blue_p50 (float64)

- landsat_ard_winter_red_p75 (float64)

- landsat_ard_winter_nir_p75 (float64)

- landsat_ard_winter_swir2_p75 (float64)

- landsat_ard_winter_red_p50 (float64)

- landsat_ard_winter_swir1_p50 (float64)

- landsat_ard_winter_swir1_p75 (float64)

- landsat_ard_winter_thermal_p50 (float64)

- landsat_ard_winter_swir1_p25 (float64)

- landsat_ard_winter_thermal_p25 (float64)

- landsat_ard_winter_nir_p25 (float64)

- landsat_ard_winter_swir2_p25 (float64)

- landsat_ard_winter_thermal_p75 (float64)

- geometry (geometry)

3.2. Training¶

To map the land cover we will use the LandMapper class, which will train a ML-model and do spacetime predictions for diferent years. This class will receive a model implementation compatible with sklearn (e.g. RandomForest, SVC, KerasClassifier, XGBClassifier), and a BaseSearchCV implementation to find the best hyperparamenter for the specified model. For the hyperparameter optimization it’s possible to select diferent scoring metrics and hyperparameter range values. In this example we will use the f1-score, that is basically a weighted average between the precision and recall. It’s possible use other evaluation metrics modifing ou creating a new python function, that will be passed as parameter for the LandMapper class.

In the remote sensing area the precision was renamed to Producer’s Accuracy (the producer of the classification is interested in understand how well a specific area on Earth can be mapped) and the recall was renamed to User’s Accuracy (the user of classification is interested in check how well the map represents what is really on the ground) (Story, 1986).

[5]:

from sklearn.metrics import f1_score

def f1_scorer(clf, X, y_true):

y_pred = clf.predict(X)

error = f1_score(y_true, y_pred, average='weighted')

return error

[6]:

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

estimator = RandomForestClassifier(n_estimators=100)

hyperpar = GridSearchCV(

estimator = estimator,

scoring = f1_scorer,

param_grid = {

'max_depth': [5, None],

'max_features': [0.5, None]

}

)

The evaluation metric will be calculated using a cross validation strategy, using diferente parts of samples to train and validate the model. Here we will use a 5-fold cv, but others validation strategies are also supported by LandMapper class.

The LandMapper receives the follow parameters:

points: the geopackage filepath or GeoPandas DataFrame instance,

feat_col_prfxs: the prefix of all columns that should be included as covariates in the feature space,

target_col: the name of the column that should be considered as the target variable by the model,

estimator: the model implementation, which could be any one available in the sklearn,

hyperpar_selection: the hyperparameter optimization implementation, which could be any one available in the sklearn,

cv: the cross-validation strategy according to sklearn,

min_samples_per_class: the minimum sample proportion per class (all the classes with less than 5% of samples will be removed from the training),

verbose: show debug information about the train and prediction steps.

[7]:

feat_col_prfxs = ['landsat', 'dtm', 'night_lights']

target_col = 'lc_class'

min_samples_per_class = 0.05

cv = 5

landmapper = LandMapper(points=points,

feat_col_prfxs = feat_col_prfxs,

target_col = target_col,

estimator = estimator,

hyperpar_selection = hyperpar,

cv = cv,

min_samples_per_class=min_samples_per_class,

verbose = True)

[15:20:41] Removing 74 samples [lc_class in ([])] due min_samples_per_class condition (< {min_samples_per_class})

[15:20:41] Transforming lc_class:

[15:20:41] -Original classes: [231. 312. 321. 322. 324. 332. 333. 335.]

[15:20:41] -Transformed classes: [0 1 2 3 4 5 6 7]

Let’s optimize and train the model:

[8]:

landmapper.train()

[15:20:41] Optimizing hyperparameters for RandomForestClassifier

[15:21:18] -0.60722 (+/-0.07412) from {'max_depth': 5, 'max_features': 0.5}

[15:21:18] -0.60963 (+/-0.07181) from {'max_depth': 5, 'max_features': None}

[15:21:18] -0.72191 (+/-0.06259) from {'max_depth': None, 'max_features': 0.5}

[15:21:18] -0.71507 (+/-0.07226) from {'max_depth': None, 'max_features': None}

[15:21:18] Best: 0.72191 using {'max_depth': None, 'max_features': 0.5}

[15:21:18] Calculating evaluation metrics

[Parallel(n_jobs=1)]: Using backend SequentialBackend with 1 concurrent workers.

[15:21:27] Training RandomForestClassifier using all samples

[Parallel(n_jobs=1)]: Done 5 out of 5 | elapsed: 9.3s finished

Let’s understand what happened here: 1. Different combinations of hyperparameters were evaluated 2. The best one was chosen and all the samples were cross validated to derive other classification metrics 3. A final model were trained using all the samples, without cv. This model will be used to classify the land-cover in future prediction

We can check other classification metrics for the best classification models

[9]:

print(f'Overall accuracy: {landmapper.eval_metrics["overall_acc"] * 100:.2f}%\n\n')

print(landmapper.eval_report)

Overall accuracy: 72.95%

precision recall f1-score support

0 0.72 0.76 0.74 66

1 0.69 0.81 0.74 199

2 0.69 0.72 0.71 166

3 0.61 0.57 0.59 116

4 0.43 0.19 0.26 84

5 0.80 0.70 0.75 101

6 0.80 0.85 0.82 229

7 0.93 0.97 0.95 89

accuracy 0.73 1050

macro avg 0.71 0.70 0.70 1050

weighted avg 0.72 0.73 0.72 1050

… and the complete confusion matrix:

[10]:

from sklearn.metrics import ConfusionMatrixDisplay

labels = [

'231: Pastures',

'312: Coniferous forest',

'321: Natural grasslands',

'322: Moors and heathland',

'324: Transitional woodland-shrub',

'332: Bare rocks',

'333: Sparsely vegetated areas',

'335: Glaciers and perpetual snow'

]

estimator = landmapper.estimator_list[0]

print("Verifing the label order:")

for label, cl in zip(labels, estimator.classes_):

print(f' - {cl:.0f} => {label}')

print("\n\nConfusion Matrix:")

confusion_matrix = landmapper.eval_metrics['confusion_matrix']

disp = ConfusionMatrixDisplay(confusion_matrix = confusion_matrix, display_labels = labels)

disp.plot(cmap='Blues', xticks_rotation='vertical')

Verifing the label order:

- 0 => 231: Pastures

- 1 => 312: Coniferous forest

- 2 => 321: Natural grasslands

- 3 => 322: Moors and heathland

- 4 => 324: Transitional woodland-shrub

- 5 => 332: Bare rocks

- 6 => 333: Sparsely vegetated areas

- 7 => 335: Glaciers and perpetual snow

Confusion Matrix:

[10]:

<sklearn.metrics._plot.confusion_matrix.ConfusionMatrixDisplay at 0x7ff325835040>

You can also can access the raw cv results:

[11]:

pd.DataFrame({

'Expected LC-class':landmapper.target,

'Predict LC-class': landmapper.eval_pred}

)

[11]:

| Expected LC-class | Predict LC-class | |

|---|---|---|

| 0 | 2 | 2 |

| 1 | 2 | 2 |

| 2 | 2 | 2 |

| 3 | 3 | 3 |

| 4 | 5 | 6 |

| ... | ... | ... |

| 1045 | 1 | 1 |

| 1046 | 5 | 6 |

| 1047 | 2 | 2 |

| 1048 | 6 | 6 |

| 1049 | 3 | 6 |

1050 rows × 2 columns

3.3. Predictions¶

Now we are ready to run the predictions. To do it the LandMapper needs receive as parameter:

dirs_layers: a list containing diferent folders that store the same raster layers used in the spacetive overlay and training phase,

fn_output: the file path to write the model output as geotiff.

First, let’s predict only the year of 2000:

[12]:

dir_timeless_layers = os.path.join(data_dir, 'timeless')

dir_2000_layers = os.path.join(data_dir, '2000')

dirs_layers = [dir_2000_layers, dir_timeless_layers]

fn_output = os.path.join(data_dir, 'land_cover_2000.tif')

output_fn_files = landmapper.predict(dirs_layers=dirs_layers, fn_output=fn_output, allow_additional_layers=True)

print('Output files:')

for output_fn_file in output_fn_files:

print(f' - {output_fn_file}')

[15:21:30] Reading 87 raster files using 4 workers

[15:21:36] Executing RandomForestClassifier

[15:21:54] RandomForestClassifier prediction time: 18.05 segs

Output files:

- /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland/land_cover_2000.tif

Now we are ready to predict multiple years, creating a list of dirs_layers and fn_output, and passing to the method predict_multi, which will perform this task in parallel:

[13]:

dir_timeless_layers = os.path.join(data_dir, 'timeless')

dirs_layers_list = []

fn_output_list = []

for year in range(2000, 2004):

dir_time_layers = os.path.join(data_dir, str(year))

dirs_layers = [dir_time_layers, dir_timeless_layers]

fn_result = os.path.join(data_dir, f'land_cover_{year}.tif')

dirs_layers_list.append(dirs_layers)

fn_output_list.append(fn_result)

output_fn_files = landmapper.predict_multi(dirs_layers_list=dirs_layers_list, fn_output_list=fn_output_list, allow_additional_layers=True)

print('Output files:')

for output_fn_file in output_fn_files:

print(f' - {output_fn_file}')

[15:21:54] Reading 87 raster files using 5 workers

[15:22:00] 1) Reading time: 6.25 segs

[15:22:00] 1) Predicting 1000000 pixels

[15:22:00] Reading 87 raster files using 5 workers

[15:22:01] Executing RandomForestClassifier

[15:22:05] 2) Reading time: 4.77 segs

[15:22:05] Reading 87 raster files using 5 workers

[15:22:10] 3) Reading time: 5.22 segs

[15:22:10] Reading 87 raster files using 5 workers

[15:22:16] 4) Reading time: 5.62 segs

[15:22:18] RandomForestClassifier prediction time: 17.54 segs

[15:22:18] 1) Predicting time: 17.54 segs

[15:22:18] 2) Predicting 1000000 pixels

[15:22:18] 1) Saving time: 0.06 segs

[15:22:19] Executing RandomForestClassifier

[15:22:35] RandomForestClassifier prediction time: 16.10 segs

[15:22:35] 2) Predicting time: 16.11 segs

[15:22:35] 3) Predicting 1000000 pixels

[15:22:35] 2) Saving time: 0.04 segs

[15:22:35] Executing RandomForestClassifier

[15:22:51] RandomForestClassifier prediction time: 15.96 segs

[15:22:51] 3) Predicting time: 15.97 segs

[15:22:51] 4) Predicting 1000000 pixels

[15:22:51] 3) Saving time: 0.04 segs

[15:22:52] Executing RandomForestClassifier

[15:23:08] RandomForestClassifier prediction time: 16.10 segs

[15:23:08] 4) Predicting time: 16.10 segs

[15:23:08] 4) Saving time: 0.03 segs

Output files:

- /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland/land_cover_2000.tif

- /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland/land_cover_2001.tif

- /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland/land_cover_2002.tif

- /home/opengeohub/leandro/Code/eumap/docs/notebooks/eumap_data/10636_switzerland/land_cover_2003.tif

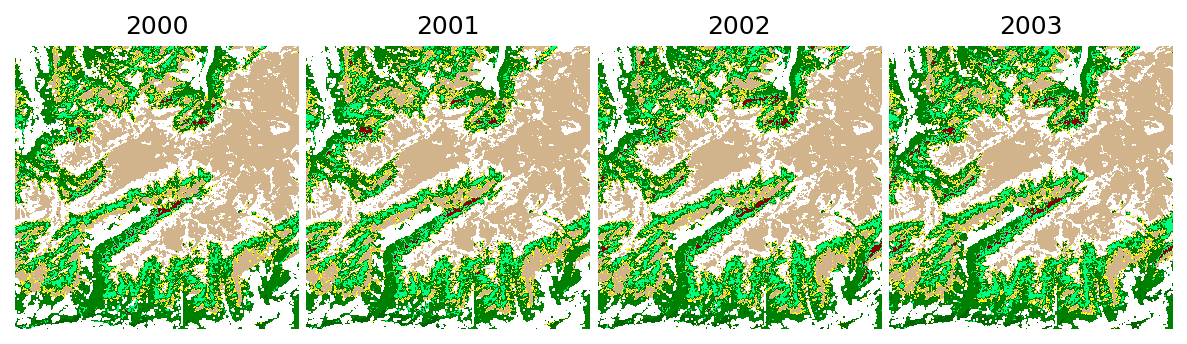

The generated geotiff files will have the following pixel values.

[14]:

import numpy as np

from matplotlib.colors import ListedColormap

lc_classes = landmapper.target_classes['original']

pixel_values = landmapper.target_classes['transformed']

colors = ListedColormap(["red", "darkred", "springgreen", "green", "darkgreen", "yellow", "tan", "white"])

print("Mapped land cover classes")

for l, p, c in zip(lc_classes, pixel_values, colors.colors):

print(f' - {l:.0f} => pixel value {p} ({c})')

Mapped land cover classes

- 231 => pixel value 0 (red)

- 312 => pixel value 1 (darkred)

- 321 => pixel value 2 (springgreen)

- 322 => pixel value 3 (green)

- 324 => pixel value 4 (darkgreen)

- 332 => pixel value 5 (yellow)

- 333 => pixel value 6 (tan)

- 335 => pixel value 7 (white)

Let’s see the the prediction results:

[15]:

from eumap.plotter import plot_rasters

titles = range(2000, 2000+len(lc_classes))

plot_rasters(*output_fn_files, cmaps = colors, titles = titles)